In his session at NGINX Conf 2018, Timo Stark, Web developer and Web Solution Architect at Audi, shares how the automobile company went from 0 to 60 on its journey to microservices. In less than a year, his team built the Audi Cockpit, a dashboard on which Audi employees can access all the internal apps they use in their work. Timo details how NGINX Plus serves as API gateway for the dashboard, which uses microservices hosted on AWS in containers that are managed with Kubernetes.

In this blog we highlight some key takeaways.

Key Takeaways

- Never store a refresh token on the client side. It’s like including an unencrypted user ID and password in a request header. Timo wrote a microservice that takes advantage of NGINX Plus’s JavaScript module to handle this.

- Before you deploy an API gateway, define what Timo calls a “macro‑design” for your APIs. In particular, decide which element in request URIs indicates the service to which the request is routed.

- When using HTTPS, enable Server Name Indication (SNI). Otherwise, the Kubernetes Ingress controller can’t see the hostname to which the client has sent its request.

- Use the nginx-asg-sync integration software to load balance AWS Auto Scaling groups using NGINX Plus. This tool monitors the instances in AWS Auto Scaling groups and updates the NGINX Plus upstream configuration dynamically, without the need for process restarts.

Supporting Multiple Authentication and Authorization Schemes

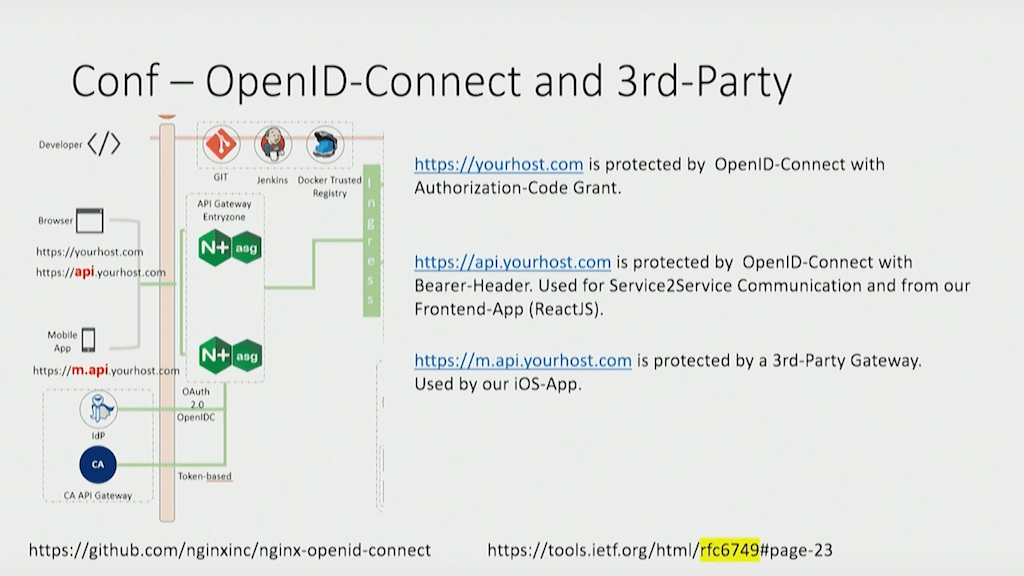

The goal was to make the Audi Cockpit lightweight, fast, stable, flexible, and, most importantly, secure. Security was a special challenge because, for simplicity and stability, requests to the backend apps need to look the same regardless of how the client accessed the app. But the Cockpit supports three distinct access methods, each with its own URL and authentication/authorization scheme:

- A frontend browser app written in React.js provides access to https://yourhost.com. Protected with OpenID Connect authorization code grant [also called authorization code flow].

- APIs access https://api.yourhost.com. Protected with OpenID Connect bearer tokens.

- Mobile devices access https://m.api.yourhost.com. Protected with CA Technologies API Gateway (which doesn’t support OpenID Connect) and tokens from identity provider Keycloak.

As Timo explains, using NGINX Plus as the API gateway is what makes it possible to support three different schemes and make them opaque to the backend services: “The intelligence is here inside the NGINX API gateway of creating [the] header field, creating new tokens, creating a structure our APIs in the backend can understand, regardless where the user comes from.”

Properly Handling Refresh Tokens

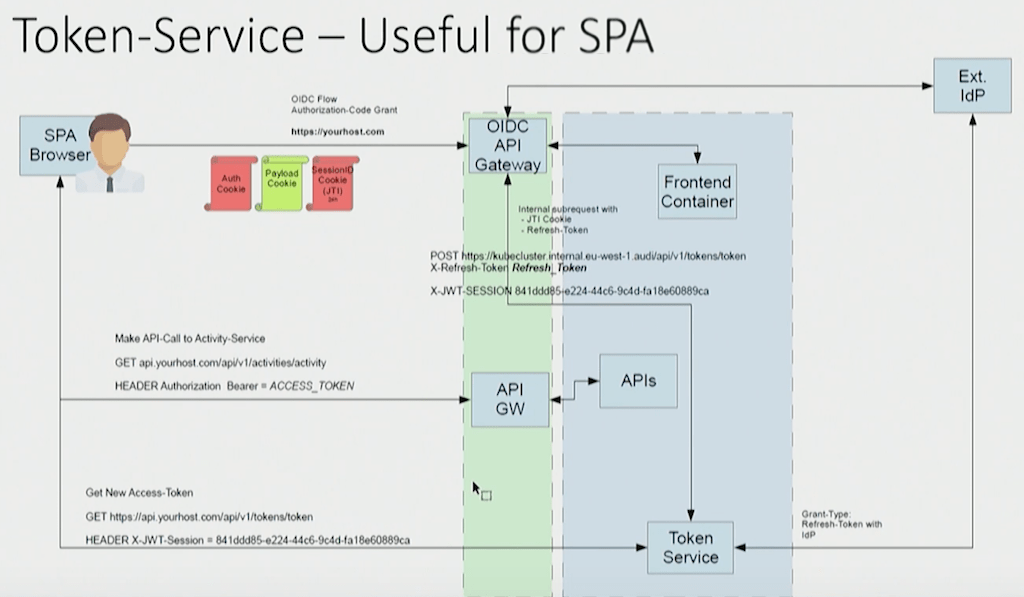

The frontend browser presented another security challenge. For optimum security, the access tokens provided to browser users expire after 5 minutes. But for the best user experience, you don’t want users to have to log in again every 5 minutes, or even for the browser page to refresh. To support these conflicting requirements, the Audi Cockpit uses refresh tokens, which have a 12‑hour lifetime and can be used to obtain a new access token when the current one expires.

But as Timo stresses, “it’s never a good idea to store a refresh token on the client side… With a refresh token, you can re‑create as many access tokens as you want for the period that the refresh token is valid. So [storing it on the client side] is like storing username and password in cleartext on the client side in a cookie. You will never do this.”

To solve the problem, Timo created a token service. Using the NGINX JavaScript module, NGINX Plus makes an internal subrequest for a new access token, sending the client’s refresh token to a backend microservice that Timo wrote. That microservice in turn obtains access tokens from the external identity provider.
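To make the idea concrete, here is a minimal sketch of a token service that keeps refresh tokens on the server side. It is not Timo's implementation: the `TokenService` class, the opaque session ID handed to the browser, and the `idp_refresh` callable standing in for the identity provider are all assumptions made for illustration. Only the 12‑hour refresh token lifetime comes from the talk.

```python
import secrets
import time

REFRESH_TTL = 12 * 60 * 60  # refresh tokens live for 12 hours (from the talk)


class TokenService:
    """Keeps refresh tokens server-side; the client only holds a session ID.

    Hypothetical sketch: the class name, the session-ID scheme, and the
    idp_refresh callable are illustrative assumptions.
    """

    def __init__(self, idp_refresh, clock=time.time):
        self._idp_refresh = idp_refresh  # callable: refresh token -> access token
        self._clock = clock              # injectable so expiry can be tested
        self._sessions = {}              # session ID -> (refresh token, expiry time)

    def login(self, refresh_token):
        """Store the refresh token and return an opaque session ID."""
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = (refresh_token, self._clock() + REFRESH_TTL)
        return session_id

    def new_access_token(self, session_id):
        """Exchange the stored refresh token for a fresh access token."""
        entry = self._sessions.get(session_id)
        if entry is None:
            raise PermissionError("unknown session")
        refresh_token, expires_at = entry
        if self._clock() >= expires_at:
            # The refresh token has expired, so the user has to log in again.
            del self._sessions[session_id]
            raise PermissionError("refresh token expired")
        return self._idp_refresh(refresh_token)
```

In this sketch the gateway would call `new_access_token` whenever the short‑lived access token runs out, so the browser never sees the refresh token itself.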

Defining a “Macro-Design” for APIs

Timo underlines the importance of defining what he calls a “macro‑design” for your APIs before deploying them. Specifically, decide which element in request URLs determines how the Kubernetes Ingress controller routes the request to a backend application. For the Audi Cockpit, it’s the fourth element in the URL, counting the hostname as the first (activities and tokens in these examples):

https://api.yourhost.com/api/v1/activities/activity?page=25

https://m.api.yourhost.com/api/v1/tokens/

Subsequent elements, such as activity?page=25, are parameters provided to the application itself.
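The routing rule can be sketched in a few lines of Python. This is only an illustration of the convention, not how the Ingress controller implements it; the function name `service_for` is hypothetical, and the sketch assumes the hostname counts as the first element.

```python
from urllib.parse import urlsplit


def service_for(url):
    """Return the fourth URL element, which names the backend service.

    Hypothetical helper: element 1 is the hostname, and the non-empty
    path segments follow in order.
    """
    parts = urlsplit(url)
    elements = [parts.hostname] + [seg for seg in parts.path.split("/") if seg]
    if len(elements) < 4:
        raise ValueError("URL does not name a service: " + url)
    return elements[3]
```

For the first example above, `service_for("https://api.yourhost.com/api/v1/activities/activity?page=25")` returns `"activities"`; the query string is ignored because it belongs to the application, not to the routing decision.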

Enabling SNI

The rule for request routing in the Kubernetes Ingress controller for the Audi Cockpit is based on the hostname (yourhost.com, api.yourhost.com, and m.api.yourhost.com). Because Audi uses TLS to protect traffic along the entire journey from the client to the backend server, the hostname inside the HTTP request is encrypted, and the Kubernetes Ingress controller cannot see it unless the client sends it in the TLS handshake using SNI (described in RFC 6066). SNI is enabled by default in many Ingress controllers, including the NGINX and NGINX Plus Ingress Controllers for Kubernetes, but you do need to include a TLS certificate in the Ingress configuration:

    tls:
    - hosts:
      - api.yourhost.com
      secretName: tls-certificate-name

Autoscaling App Instances in AWS

The Audi Cockpit takes advantage of the NGINX Plus nginx-asg-sync package to monitor the AWS Auto Scaling groups for backend applications and dynamically update the NGINX Plus upstream configuration as the number of Kubernetes workers changes in response to demand. Using the package enabled Timo to eliminate the AWS Network Load Balancer (NLB) previously used for this purpose.
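Conceptually, keeping a load balancer in step with an Auto Scaling group is a reconciliation loop: compare the instances the group currently contains with the servers the load balancer knows about, then add and remove the difference. The sketch below shows just that comparison step. It is not the nginx-asg-sync code; the function name and the `host:port` address format are assumptions for illustration.

```python
def plan_upstream_changes(asg_instances, upstream_servers):
    """Work out which servers to add to and remove from an upstream group.

    Hypothetical helper: both arguments are iterables of "host:port"
    strings. Returns (to_add, to_remove) as sorted lists.
    """
    desired = set(asg_instances)     # what the Auto Scaling group contains now
    current = set(upstream_servers)  # what the load balancer is configured with
    to_add = sorted(desired - current)
    to_remove = sorted(current - desired)
    return to_add, to_remove
```

Applying the two lists through a runtime configuration API, rather than rewriting a config file and restarting, is what lets the upstream group change without interrupting traffic.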

For all the details and more tips on developing microservices applications, watch the complete video of Timo’s session.
