For many retailers, Thanksgiving is a time of trepidation and anticipation. While much of the rest of the country relaxes with family, both brick‑and‑mortar and online retailers brace themselves for the onslaught of Black Friday and Cyber Monday.

The challenges confronting both types of retailers are very similar. Facing massive visitor traffic to their stores, they must ensure that every potential customer is welcomed in and given space to browse, and that the staff and resources are on hand to help them complete their transaction. Shoppers have plenty of choices but are short of time on these critical days. If one store appears overcrowded and slow to serve them, it’s easy to move next door.

In this article, I’ll share four ways you can use NGINX and NGINX Plus to prepare for massive increases in customer traffic.

NGINX Powers Over 40% of the World’s Busiest Websites

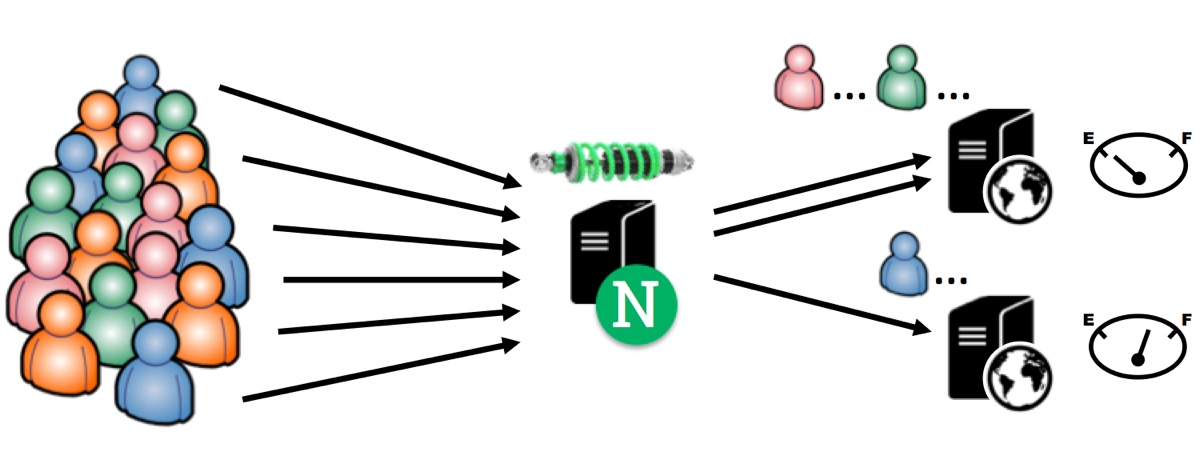

NGINX began as a web acceleration solution and is now used by over 40% of the world’s busiest websites to load balance and scale their traffic. A useful analogy is that NGINX and NGINX Plus act as an ‘Internet shock absorber’: they enable your website to run faster and more smoothly across rough terrain.

Step 1: Deploy the Shock Absorber

Think of NGINX and NGINX Plus as a gatekeeper managing traffic at the front of your store. They gently queue and admit each shopper (HTTP request), transforming the chaotic scrum on the sidewalk into a smooth, orderly procession in the store. Each visitor is given their own space and routed to the least‑busy area of the store, so traffic is distributed evenly and all resources are used equally.
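The least‑busy routing described above corresponds roughly to a least‑connections policy, which NGINX offers through the `least_conn` directive in an `upstream` block (the default method is round robin). The Python sketch below models only the selection rule; the `LeastConnBalancer` class and the server names are hypothetical, and this is not how NGINX implements load balancing internally.

```python
from dataclasses import dataclass, field


@dataclass
class LeastConnBalancer:
    """Toy least-connections balancer: each new request goes to the
    server with the fewest requests currently in flight."""
    servers: list[str]
    active: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Start every server with zero in-flight requests.
        for s in self.servers:
            self.active.setdefault(s, 0)

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties
        # are broken by the order of the servers list.
        server = min(self.servers, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the request sent to `server` completes.
        self.active[server] -= 1


lb = LeastConnBalancer(["app1", "app2", "app3"])
first = [lb.acquire() for _ in range(3)]  # one request per server
lb.release("app2")                        # app2 finishes its request early
nxt = lb.acquire()                        # so the next request goes to app2
print(first, nxt)  # ['app1', 'app2', 'app3'] app2
```

A slow server accumulates in-flight requests and is naturally skipped until it catches up, which is what keeps load even when requests vary in cost.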

NGINX and NGINX Plus employ a range of out‑of‑the‑box techniques to achieve this. HTTP offload buffers slow HTTP requests and admits them only when they are ready. Transactions complete much more quickly when the upstream server receives them from NGINX or NGINX Plus over the fast local network than when it must wait on a distant client. Efficient use of HTTP keepalive connections and careful load balancing spread traffic evenly and make the most of your server resources.
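To illustrate what buffering slow HTTP requests means, here is a minimal Python sketch of the idea (not of NGINX internals): the proxy accumulates bytes from a slow client and hands the request on only once it is complete, so no application worker sits idle waiting on a distant client. The `RequestBuffer` class and the sample request are hypothetical, and the parser deliberately handles only `Content-Length` bodies. In NGINX itself, buffering of proxied request bodies is governed by the `proxy_request_buffering` directive, which is on by default.

```python
from typing import Optional


class RequestBuffer:
    """Collects bytes from one (possibly slow) client and reports when a
    complete HTTP/1.1 request has arrived. Simplified on purpose: the body
    length must come from Content-Length (no chunked encoding), and
    pipelined requests are ignored."""

    def __init__(self) -> None:
        self.data = bytearray()

    def feed(self, chunk: bytes) -> Optional[bytes]:
        """Append a chunk. Return the whole request once it is complete;
        return None while still waiting (no backend is involved yet)."""
        self.data += chunk
        head_end = self.data.find(b"\r\n\r\n")
        if head_end == -1:
            return None  # request headers not finished yet
        header_lines = self.data[:head_end].decode("latin-1").split("\r\n")[1:]
        length = 0
        for line in header_lines:
            name, _, value = line.partition(":")
            if name.strip().lower() == "content-length":
                length = int(value.strip())
        total = head_end + 4 + length  # headers + blank line + body
        if len(self.data) < total:
            return None  # body still arriving
        return bytes(self.data[:total])


# A slow client delivers its request in four small pieces.
buf = RequestBuffer()
pieces = [
    b"POST /cart HTTP/1.1\r\nHost: shop.example\r\n",
    b"Content-Length: 5\r\n\r\n",
    b"ite",
    b"ms",
]
results = [buf.feed(p) for p in pieces]
# Only the final feed() returns a request; that is the moment a proxy
# would forward it to an application server in one fast transfer.
print([r is not None for r in results])  # [False, False, False, True]
```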

Step 2: Employ Caching

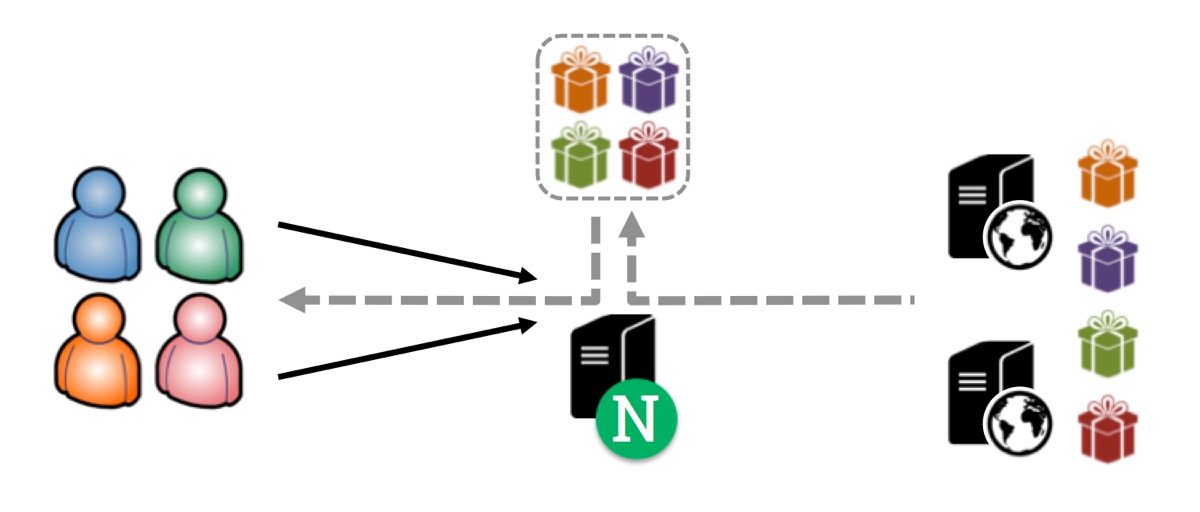

Click‑and‑collect, online reservations for in‑store pickup, and even customer self‑checkout (for example, Apple’s EasyPay) reduce the time that customers need to spend in a physical store and increase the likelihood of a successful transaction.

Content caching with NGINX and NGINX Plus has a similar effect for web traffic. Common HTTP responses can be stored automatically at the NGINX or NGINX Plus edge; when several site visitors request the same web page or resource, NGINX or NGINX Plus can respond immediately and does not need to forward each request to an upstream application server.
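As a rough model of edge caching, the Python sketch below keeps responses in memory keyed by URL with a fixed time-to-live and contacts the upstream only on a miss or after expiry. `ResponseCache`, `fake_upstream`, the `/deals` path, and the 60‑second TTL are hypothetical; in NGINX itself caching is configured with directives such as `proxy_cache_path` and `proxy_cache`, which store responses on disk and offer far more control than this toy.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Toy edge cache: stores responses keyed by URL for `ttl` seconds,
    so repeated requests for the same page skip the upstream server."""

    def __init__(self, fetch: Callable[[str], str], ttl: float = 60.0) -> None:
        self.fetch = fetch  # function that asks the upstream server
        self.ttl = ttl
        self.store: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = time.monotonic()
        hit = self.store.get(url)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]          # cache hit: answered at the edge
        body = self.fetch(url)     # miss or expired entry: go upstream
        self.store[url] = (now, body)
        return body


upstream_calls = []


def fake_upstream(url: str) -> str:
    # Hypothetical stand-in for an application server.
    upstream_calls.append(url)
    return f"<html>{url}</html>"


cache = ResponseCache(fake_upstream, ttl=60.0)
for _ in range(100):
    cache.get("/deals")
print(len(upstream_calls))  # 1: only the first request reached upstream
```

Run as written, 100 requests for the same page produce a single upstream call; the other 99 are served from the cache.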

Depending on your application, content caching can reduce the volume of internal traffic by a factor of up to 100, reducing the hardware capacity you need to serve your app.
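One way to read that figure, assuming each cache hit is served entirely from the cache: if a fraction h of requests (the cache hit ratio) is answered at the edge, only the remaining 1 - h reach the application servers, so a hundredfold reduction corresponds to a hit ratio of 99%. This is an illustrative back-of-the-envelope relation, not a measured result.

```latex
\text{backend requests} = (1 - h) \times \text{total requests}
\quad\Longrightarrow\quad
\frac{\text{total requests}}{\text{backend requests}} = \frac{1}{1 - h},
\qquad h = 0.99 \;\Rightarrow\; \frac{1}{0.01} = 100
```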

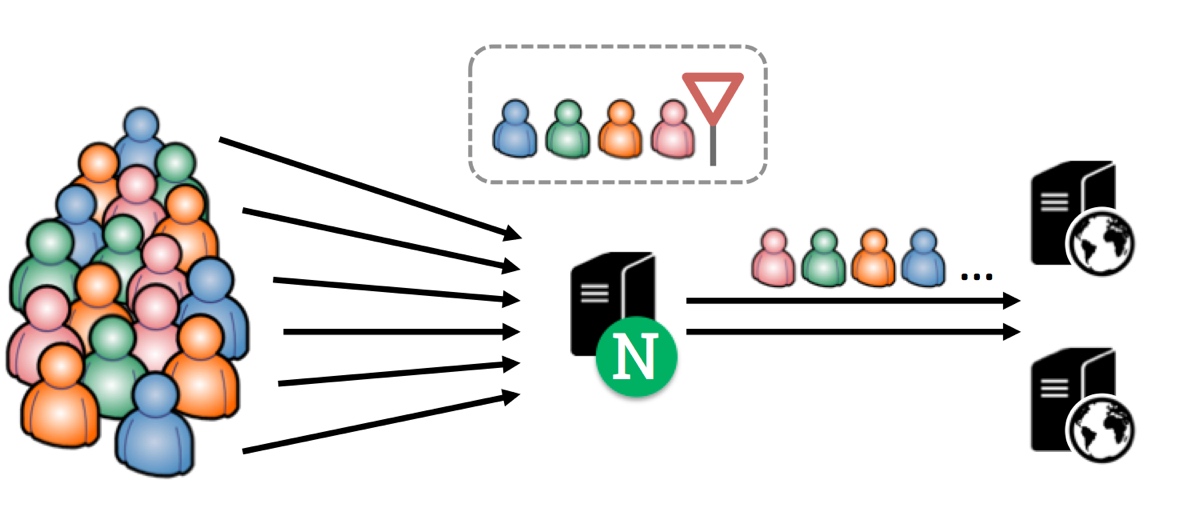

Step 3: Control Visitor Traffic

At the busiest times, a gatekeeper in front of your store might need to restrict the traffic coming through the door. Perhaps it’s a safety issue – the store is dangerously overcrowded – or perhaps you’ve set up ‘VIP‑only’ shopping hours when only customers with the right dress code, store card, or invitation can enter.

Similar measures are sometimes necessary for web applications. Limiting traffic ensures that each active request has access to the resources it needs without overwhelming your servers. NGINX and NGINX Plus offer a range of methods for enforcing such limits to protect your applications.

Concurrency limits restrict the number of concurrent requests forwarded to each server, to match the limited number of worker threads or processes each one has. Rate limits apply a per‑second or per‑minute restriction on client activity, protecting expensive services such as a payment gateway or complex search.
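Both kinds of limit can be sketched in a few lines of Python. The rate limiter below follows the leaky‑bucket approach that NGINX documents for its `limit_req` directive, and the concurrency cap mirrors the idea behind the `max_conns` parameter of a `server` in an `upstream` block. The class names, the 1 request/second rate, and the client address are hypothetical; this is a simplified model, not NGINX's implementation.

```python
import time
from typing import Dict, Optional


class LeakyBucketLimiter:
    """Per-client rate limit: each key may make `rate` requests per second,
    with up to `burst` extra requests tolerated on top of that."""

    def __init__(self, rate: float, burst: int = 0) -> None:
        self.rate = rate
        self.burst = burst
        self.level: Dict[str, float] = {}  # current bucket fill per key
        self.last: Dict[str, float] = {}   # time of the last check per key

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # The bucket drains at `rate` units per second since the last check.
        elapsed = now - self.last.get(key, now)
        level = max(0.0, self.level.get(key, 0.0) - elapsed * self.rate)
        self.last[key] = now
        if level > self.burst:
            self.level[key] = level
            return False  # bucket full: reject this request
        self.level[key] = level + 1.0  # accept and add one unit
        return True


class ConcurrencyCap:
    """Caps in-flight requests per upstream server."""

    def __init__(self, max_conns: int) -> None:
        self.max_conns = max_conns
        self.in_flight: Dict[str, int] = {}

    def try_acquire(self, server: str) -> bool:
        n = self.in_flight.get(server, 0)
        if n >= self.max_conns:
            return False  # server saturated: queue or pick another server
        self.in_flight[server] = n + 1
        return True

    def release(self, server: str) -> None:
        self.in_flight[server] -= 1


limiter = LeakyBucketLimiter(rate=1.0)  # 1 request/second, no burst
print([limiter.allow("203.0.113.7", now=t) for t in (0.0, 0.5, 1.0)])
# [True, False, True]

cap = ConcurrencyCap(max_conns=2)
print([cap.try_acquire("app1") for _ in range(3)])  # [True, True, False]
```

Passing `now` explicitly makes the limiter deterministic for testing; in live use it falls back to `time.monotonic()`.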

You can also treat different types of clients differently if necessary. Perhaps the delivery area for your store does not extend to Asia, or you want to prioritize users who have items in their shopping baskets. With NGINX and NGINX Plus, you can use cookies, geolocation data, and other parameters to control how rate limits are applied.
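A client-specific policy like this can be sketched as a classification step that runs before any limit is applied. In NGINX such policies are typically built from variables using the `map` and `geo` directives; note also that `limit_req_zone` does not count requests whose key evaluates to an empty string, which is one way to exempt priority shoppers. In the Python sketch below, the `basket_id` cookie, the `Request` type, and the use of the continent code "AS" for an out-of-area region are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical policy values, for illustration only.
PRIORITY_COOKIE = "basket_id"  # assumed to be set once a shopper adds an item
BLOCKED_REGIONS = {"AS"}       # continent codes outside the delivery area


@dataclass
class Request:
    client_ip: str
    region: str  # assumed to come from a GeoIP lookup
    cookies: Dict[str, str] = field(default_factory=dict)


def policy(req: Request) -> str:
    """Classify a request as 'deny', 'priority', or 'limited'."""
    if req.region in BLOCKED_REGIONS:
        return "deny"      # outside the delivery area
    if req.cookies.get(PRIORITY_COOKIE):
        return "priority"  # shopper already has items in the basket
    return "limited"       # everyone else is rate limited per client IP


def rate_limit_key(req: Request) -> str:
    # Empty key for priority shoppers, echoing NGINX's rule that requests
    # with an empty limit_req_zone key are not counted.
    return "" if policy(req) == "priority" else req.client_ip


reqs = [
    Request("198.51.100.1", "EU", {"basket_id": "b42"}),
    Request("198.51.100.2", "EU"),
    Request("198.51.100.3", "AS"),
]
print([policy(r) for r in reqs])              # ['priority', 'limited', 'deny']
print([rate_limit_key(r) for r in reqs[:2]])  # ['', '198.51.100.2']
```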

Step 4: Specialize Your Store

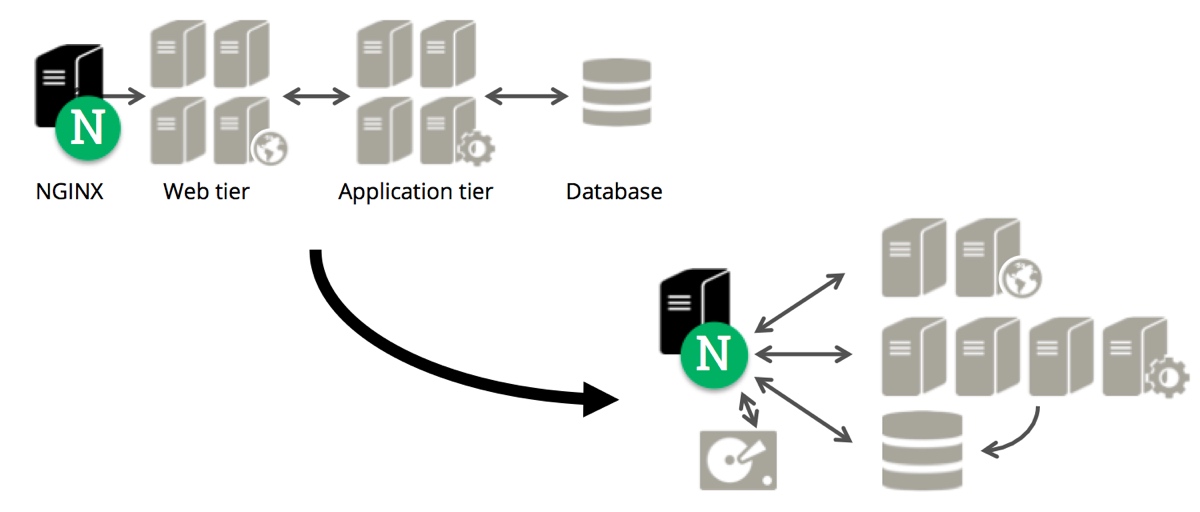

Large department stores and supermarkets are experts in maximizing revenue through clever store design. They partition the floor space into themes, use concession stores, and strategically locate impulse buys throughout the store. They can respond quickly to changes in customer demand or to new promotions, reconfiguring their layout in hours.

This partitioning can also be applied to website infrastructure. Traditional application architecture with its three monolithic tiers (web, app, and database) has had its day. It’s proven to be inflexible, hard to update, and difficult to scale. Modern web architectures are settling on a much more distributed design, made up of composable HTTP‑based services that can be scaled and updated independently.

This approach requires a sophisticated traffic management solution to route and control traffic, and offload tasks where possible to consolidate and simplify. This is where NGINX Plus excels – it’s a combination load balancer, cache, and web server in a single high‑performance software solution.

Not only can NGINX and NGINX Plus route and load balance traffic like an application delivery controller (ADC), they can also interface directly with application servers using protocols such as FastCGI or uWSGI, and offload lightweight tasks, such as serving of static content, from your backend applications.
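The partitioning described above largely comes down to routing by URL: static assets are answered by the proxy itself, and each path prefix is forwarded to the service that owns it. The Python sketch below uses a hypothetical routing table and service names; its longest-prefix rule is similar in spirit to how NGINX chooses among prefix `location` blocks, though it ignores regular-expression locations and everything else a real configuration involves.

```python
from typing import List, Optional, Tuple

# Hypothetical routing table: (path prefix, target). The longest matching
# prefix wins, so the order of entries does not matter.
ROUTES: List[Tuple[str, str]] = [
    ("/", "storefront-app"),          # catch-all application
    ("/static/", "local-files"),      # offloaded: served by the proxy itself
    ("/api/cart/", "cart-service"),
    ("/api/search/", "search-service"),
]


def route(path: str) -> str:
    """Return the target that should handle `path`."""
    best: Optional[Tuple[str, str]] = None
    for prefix, target in ROUTES:
        if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
            best = (prefix, target)
    if best is None:
        raise ValueError(f"no route for {path!r}")
    return best[1]


for p in ["/static/logo.png", "/api/cart/add", "/api/search/?q=tv", "/deals"]:
    print(p, "->", route(p))
```

Adding or splitting out a service then means adding one entry to the table, without touching the other services.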

Deploying NGINX and NGINX Plus to Improve Performance

NGINX and NGINX Plus are software reverse proxies, which makes initial deployment simple and enables a gradual migration of your application architecture to the distributed approach described above.

NGINX is the market‑leading open source web accelerator and web server used by over 40% of the world’s busiest websites. NGINX Plus is the commercially supported solution with extended load balancing, monitoring, and management features. To find out more, compare NGINX and NGINX Plus features or try NGINX Plus for free.

Use NGINX and NGINX Plus to deliver your web properties this shopping season and relax, confident in the knowledge that you’re ready for whatever the market sends your way.