We are happy to announce release 1.8.0 of the NGINX Ingress Controller for Kubernetes. This release continues the development of our supported solution for Ingress load balancing on Kubernetes platforms, including Red Hat OpenShift, Amazon Elastic Kubernetes Service (EKS), the Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), IBM Cloud Private, Diamanti, and others.

With release 1.8.0, we continue our commitment to providing a flexible, powerful, and easy-to-use Ingress Controller, which you can configure with both Kubernetes Ingress resources and NGINX Ingress resources:

- Kubernetes Ingress resources provide maximum compatibility across Ingress Controller implementations, and can be extended using annotations and custom templates to generate sophisticated configuration.

- NGINX Ingress resources provide an NGINX‑specific configuration schema, which is richer and safer than customizing the generic Kubernetes Ingress resources.

Release 1.8.0 brings the following major enhancements and improvements:

- Integration with NGINX App Protect – NGINX App Protect is the leading NGINX‑based application security solution, providing deep signature and structural protection for your web applications.

- Extensibility for NGINX Ingress resources – For users who want to use NGINX Ingress resources but need to customize NGINX features that the VirtualServer and VirtualServerRoute resources don’t currently expose, two complementary mechanisms are now supported: configuration snippets and custom templates.

- URI rewrites and request and response header modification – These features let you rewrite request URIs. They also give you granular control (adding, removing, and ignoring) over the request headers passed to upstreams and the response headers passed back to clients.

- Policies and IP address access control lists – With policies, traffic management functionality is abstracted within a separate Kubernetes object that different teams can define and apply in multiple places. Access control lists (ACLs) filter traffic flowing through the NGINX Ingress Controller based on the client IP address.

- A readiness probe

- Support for multiple Ingress Controllers in VirtualServer and VirtualServerRoute resources and Helm charts

- Status information about VirtualServer and VirtualServerRoute resources

- Updates to the NGINX Ingress Operator for Red Hat OpenShift

What Is the NGINX Ingress Controller for Kubernetes?

The NGINX Ingress Controller for Kubernetes is a daemon that runs alongside NGINX Open Source or NGINX Plus instances in a Kubernetes environment. The daemon monitors Kubernetes Ingress resources and NGINX Ingress resources to discover requests for services that require Ingress load balancing. The daemon then automatically configures NGINX or NGINX Plus to route and load balance traffic to these services.

Multiple NGINX‑based Ingress controller implementations are available. The official NGINX implementation is high‑performance, production‑ready, and suitable for long‑term deployment. We focus on providing stability across releases, with features that can be deployed at enterprise scale. We provide full technical support to NGINX Plus subscribers at no additional cost, and NGINX Open Source users benefit from our focus on stability and supportability.

What’s New in NGINX Ingress Controller 1.8.0?

Integration with NGINX App Protect

As more businesses accelerate their digital transformation, attacks against applications are increasing, and new, more complex attacks that exploit vulnerabilities appear daily. Unlike network firewalls and anti‑virus solutions, NGINX App Protect is an intelligent web application firewall (WAF) that can effectively mitigate malicious attacks against applications at high speed.

Release 1.8.0 embeds NGINX App Protect within the NGINX Ingress Controller, so you can use the familiar Kubernetes API to manage App Protect security policies and configuration.

The consolidated solution brings three unique benefits:

- Securing the application perimeter – In a well‑architected Kubernetes deployment, the Ingress Controller is the only point of entry for data‑plane traffic to services running within Kubernetes, making it an ideal location to deploy a security proxy.

- Consolidating the data plane – Embedding the WAF within the Ingress Controller eliminates the need for a separate WAF device. This reduces complexity, cost, and the number of points of failure.

- Consolidating the control plane – Managing WAF configuration with the Kubernetes API makes it significantly easier to automate CI/CD processes. NGINX Ingress Controller configuration is compliant with Kubernetes role‑based access control (RBAC) practices, so the WAF configuration may be delegated securely to a dedicated DevSecOps team.
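WAF configuration is stored in Kubernetes objects, so you can delegate it with ordinary RBAC. The following minimal sketch is not taken from the NGINX documentation. It defines a Role and RoleBinding that let a DevSecOps group manage App Protect resources in one namespace. Several names are assumptions: the namespace (cafe), the Role and group names (waf-admin, devsecops), and the App Protect API group and resource names (appprotect.f5.com, appolicies, aplogconfs). Check them against the CRDs installed in your cluster.

```yaml
# Hypothetical Role: full control over App Protect objects in the "cafe"
# namespace. The API group and plural resource names are assumed.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: waf-admin
  namespace: cafe
rules:
- apiGroups: ["appprotect.f5.com"]
  resources: ["appolicies", "aplogconfs"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Hypothetical binding of that Role to a "devsecops" group
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: waf-admin-devsecops
  namespace: cafe
subjects:
- kind: Group
  name: devsecops
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: waf-admin
  apiGroup: rbac.authorization.k8s.io
```

Application owners without this binding can still manage their own VirtualServer and Ingress resources but cannot change WAF policies.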

For more detailed information on configuring and troubleshooting NGINX App Protect in NGINX Ingress Controller, see the Ingress Controller documentation. For information about other App Protect use cases, see the NGINX App Protect documentation.

You must have subscriptions for NGINX Plus and NGINX App Protect to build App Protect into the NGINX Ingress Controller image.

Extending NGINX Ingress Resources with Snippets and Custom Templates

In previous releases, you could insert NGINX configuration into standard Kubernetes Ingress resources using snippets and custom templates. Snippets allow you to assign NGINX‑native configuration to most NGINX configuration contexts. Custom templates allow you to generate other blocks of the configuration, such as the default server, and apply them with ConfigMaps. Release 1.8.0 extends the use of snippets and custom templates to the NGINX Ingress resources, VirtualServer and VirtualServerRoute.

Both snippets and custom templates let admins implement use cases and functionality that are not exposed through either standard Kubernetes Ingress resources or NGINX Ingress resources. One particularly important use case is migrating to Kubernetes non‑Kubernetes applications that NGINX already proxies. With snippets and custom templates, you can keep using NGINX features that the NGINX Ingress resources don't yet implement, such as caching and rate limiting.

This sample configuration shows how to configure caching and rate limiting with snippets that apply different settings in different NGINX configuration contexts:

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
  namespace: cafe
spec:
  http-snippets: |
    limit_req_zone $binary_remote_addr zone=mylimit:10m rate=1r/s;
    proxy_cache_path /tmp keys_zone=one:10m;
  host: cafe.example.com
  tls:
    secret: cafe-secret
  server-snippets: |
    limit_req zone=mylimit burst=20;
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  - name: coffee
    service: coffee-svc
    port: 80
  routes:
  - path: /tea
    location-snippets: |
      proxy_cache one;
      proxy_cache_valid 200 10m;
    action:
      pass: tea
  - path: /coffee
    action:
      pass: coffee
```

It’s important to quality‑check snippets and custom templates carefully: an invalid snippet or template prevents NGINX from reloading its configuration. While traffic processing is not interrupted in this case (NGINX continues running with the existing valid configuration), additional mechanisms are available to increase stability:

- A global enable‑disable setting for snippets.

- Typically only administrators can apply ConfigMaps, which are the only mechanism for configuring custom templates.
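The second point can be sketched as follows. This assumes the Ingress Controller reads its settings from a ConfigMap named nginx-config in the nginx-ingress namespace, as in the default installation manifests. It also assumes that custom VirtualServer templates go under the virtualserver-template key. The global snippets setting is assumed to be the Ingress Controller's -enable-snippets command-line argument. Verify all of these names against the documentation for your release.

```yaml
# Assumed: the ConfigMap the Ingress Controller is configured to read
# (for example with -nginx-configmaps=nginx-ingress/nginx-config).
# Only principals allowed to edit it can change templates.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  namespace: nginx-ingress
data:
  # Assumed key for the VirtualServer/VirtualServerRoute template.
  # The value must be a complete template; this placeholder is only a
  # Go template comment and renders nothing.
  virtualserver-template: |
    {{/* full custom VirtualServer template goes here */}}
```

Applying the placeholder as is would leave every VirtualServer without configuration. In practice, start from the release's default VirtualServer template and modify it.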

URI Rewrites and Request and Response Header Modification

Release 1.8.0 introduces URI rewriting and modification of request and response headers, on both upstream and downstream connections. This essentially decouples end users from the backend by adding a filtering and modification layer. This functionality is now available directly in the VirtualServer and VirtualServerRoute resources and is configurable for specific paths.

Being able to rewrite URIs and modify request and response headers lets you quickly address unforeseen reliability issues in production environments without requiring backend developers to troubleshoot or rewrite code. That in turn increases the availability, security, and resilience of your application workloads in Kubernetes.

Typical use cases include:

- URI rewrites – An administrator might want to publish an application on a path different from the one exposed by the application code. For example, you can expose an application externally at the /coffee URI and have the Ingress Controller translate requests to the root (/) URI where the application backends are listening.

- Caching – Application developers don’t always set caching headers correctly, or at all. The administrator can use header modification to patch the headers in a deployed application.
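The two use cases above can be combined in a single route. The following sketch publishes the coffee application at /coffee while its backends listen on /. It also replaces any Cache-Control header the application sends with one set by the administrator. The sketch uses the rewritePath field and the hide and add lists of responseHeaders under the proxy action. The Cache-Control value is an illustrative assumption, and so is the assumption that the same header can be both hidden and added.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  upstreams:
  - name: coffee
    service: coffee-svc
    port: 80
  routes:
  - path: /coffee
    action:
      proxy:
        upstream: coffee
        # Clients request /coffee; the backends listen on /
        rewritePath: /
        responseHeaders:
          # Drop the Cache-Control header sent by the application, if any...
          hide:
          - Cache-Control
          # ...and send the administrator's value instead (illustrative)
          add:
          - name: Cache-Control
            value: "public, max-age=600"
```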

You configure header modifications and URI rewrites in a VirtualServer or VirtualServerRoute under the proxy action, where requests are passed to an upstream.

In this sample configuration, when the Ingress Controller proxies client requests for the /tea path, it sets the Content-Type request header to application/json before forwarding the request to the tea upstream. It also rewrites the /tea URI to / as specified in the rewritePath field. Before passing the server’s response back to the client, the Ingress Controller adds a new Access-Control-Allow-Origin header and hides, ignores, and passes several others.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  - name: coffee
    service: coffee-svc
    port: 80
  routes:
  - path: /tea
    action:
      proxy:
        upstream: tea
        requestHeaders:
          pass: true
          set:
          - name: Content-Type
            value: application/json
        responseHeaders:
          add:
          - name: Access-Control-Allow-Origin
            value: "*"
            always: true
          hide:
          - x-internal-version
          ignore:
          - Expires
          - Set-Cookie
          pass:
          - Server
        rewritePath: /
  - path: /coffee
    action:
      pass: coffee
```

This sample configuration shows how you can embed header manipulation and URI rewriting in an action block under a conditional match, for even more sophisticated traffic handling:

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  - name: tea-post
    service: tea-post-svc
    port: 80
  routes:
  - path: /tea
    matches:
    - conditions:
      - variable: $request_method
        value: POST
      action:
        proxy:
          upstream: tea-post
          requestHeaders:
            pass: true
            set:
            - name: Content-Type
              value: application/json
          rewritePath: /
    action:
      pass: tea
```

Policies and IP Address Access Control Lists

In most organizations, multiple teams collaborate on application delivery. For example, NetOps is responsible for opening ports and filtering traffic, SecOps ensures a consistent security posture, and multiple application owners provision Ingress load‑balancing policies for multiple backend application services.

Because every organization delegates roles in different ways, the NGINX Ingress Controller gives you maximum flexibility in defining which teams own specific parts of the configuration. It then assembles all the pieces into a single working configuration. This supports multi‑tenancy while making delegation as simple as possible for the administrator.

In support of multi‑tenancy, NGINX Ingress Controller release 1.8.0 introduces policies and the first supported policy type: IP address‑based access control lists (ACLs).

- With policies, you can abstract traffic management functionality within a separate Kubernetes object that can be defined and applied in multiple places by different teams. This is an easier, more natural way to configure the NGINX Ingress Controller and brings many advantages: type safety, delegation, multi‑tenancy, encapsulation and simpler configs, reusability, and reliability.

- With IP address‑based ACLs, you can filter network traffic and precisely control which IP addresses (or groups of IP addresses, defined in CIDR notation) are allowed or denied access to a specific Ingress resource.

Note: In release 1.8.0, policies can be referenced only in VirtualServer resources, where they apply to the server context. In a future release we plan to extend policy support to VirtualServerRoute resources, where policies apply to the location context. We also plan to implement policies for other functions, such as rate limiting.

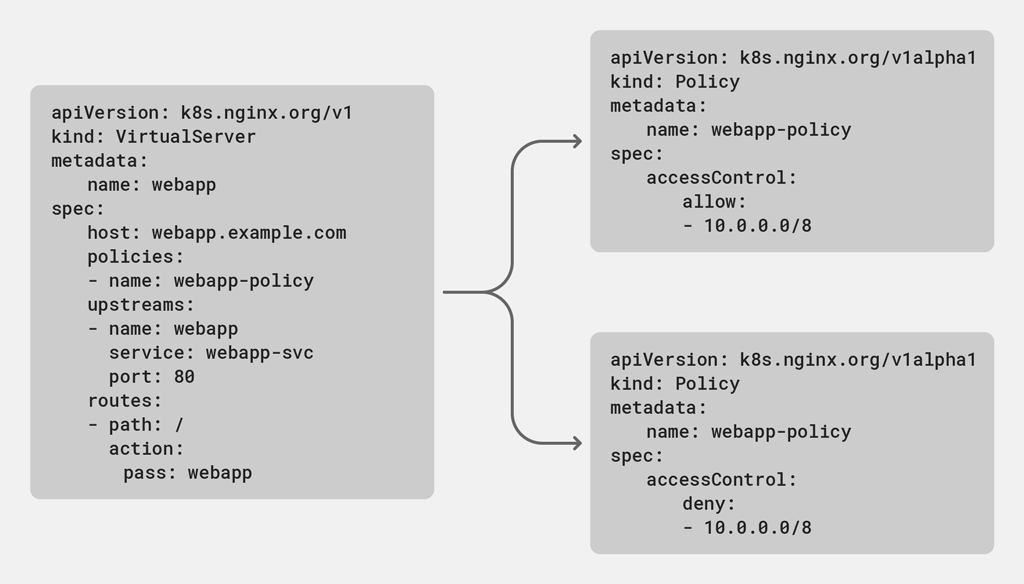

The following diagram illustrates how a policy can be used. The VirtualServer resource on the left references a policy called webapp-policy, and the configuration snippets on the right each define a policy with that name that filters (allows or denies, respectively) connections from the 10.0.0.0/8 subnet. Assigning the name to both policies means you can toggle between them by applying the intended policy to the VirtualServer using the Kubernetes API.
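The arrangement in the diagram can be sketched in YAML as follows. The sketch assumes the Policy resource is served under the k8s.nginx.org/v1alpha1 API version, with an accessControl block that holds an allow or deny list. It also assumes a VirtualServer references policies by name under spec.policies. The webapp host, upstream, and Service names are hypothetical.

```yaml
# VirtualServer that refers to the policy only by name
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: webapp
spec:
  host: webapp.example.com
  policies:
  - name: webapp-policy
  upstreams:
  - name: webapp
    service: webapp-svc
    port: 80
  routes:
  - path: /
    action:
      pass: webapp
---
# Policy that allows only clients in 10.0.0.0/8.
# The alternative Policy has the same name and uses "deny" in place of "allow".
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: webapp-policy
spec:
  accessControl:
    allow:
    - 10.0.0.0/8
```

To switch from allowing the subnet to denying it, apply the alternative Policy in place of this one. The VirtualServer stays untouched.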

Other New Features

Readiness Probe

In environments with many Ingress resources (standard Kubernetes, NGINX, or both), it’s possible for Pods to come online before the NGINX configuration is fully loaded, causing traffic to be blackholed. In line with our commitment to production‑grade reliability, release 1.8.0 introduces a Kubernetes readiness probe to ensure that traffic is not forwarded to a specific Pod until the Ingress Controller is ready to accept traffic (that is, the NGINX configuration is loaded and no reloads are pending).
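The following minimal sketch shows how the probe might be wired into the Ingress Controller's Pod spec. It assumes the controller reports readiness over HTTP at /nginx-ready on port 8081. Check the release's installation manifests for the exact path, port, and probe timing.

```yaml
# Fragment of the Ingress Controller container in its Deployment or DaemonSet
ports:
- name: readiness-port
  containerPort: 8081        # assumed readiness port
readinessProbe:
  httpGet:
    path: /nginx-ready       # assumed readiness path
    port: readiness-port
  periodSeconds: 1           # illustrative: probe often so the Pod joins quickly
```

Until the probe succeeds, Kubernetes leaves the Pod out of the Service's endpoints, so no client traffic is routed to it.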

Enabling Multiple Ingress Controllers in NGINX Ingress Resources and Helm Charts

In previous releases, it was possible for multiple instances of the NGINX Ingress Controller to coexist on the same cluster, but only by using the kubernetes.io/ingress.class annotation in the standard Kubernetes Ingress resource to specify the target NGINX Ingress Controller deployment. In release 1.8.0, we have added the ingressClassName field to the VirtualServer and VirtualServerRoute resources for the same purpose. We have also updated our Helm charts to support the deployment of multiple NGINX Ingress Controller deployments.
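The following sketch shows a VirtualServer pinned to one of several deployments. The class name nginx-internal is hypothetical. The sketch assumes the target deployment is started with a matching class, for example through its -ingress-class command-line argument.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  # Intended to be handled only by the Ingress Controller deployment whose
  # class is nginx-internal (hypothetical name)
  ingressClassName: nginx-internal
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  routes:
  - path: /tea
    action:
      pass: tea
```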

Displaying the Status of VirtualServer and VirtualServerRoute Resources

The output from the kubectl describe command indicates whether the configuration for an Ingress resource was successfully updated (in the Events section) and lists the IP addresses and ports of its external endpoints (in the Status section). In release 1.8.0, we have extended the VirtualServer and VirtualServerRoute resources to return this information. For VirtualServerRoute resources, the Status section also names the parent VirtualServer resource.

As an app owner, you might find that the parent VirtualServer of a VirtualServerRoute resource has been removed, making your application inaccessible. You can now troubleshoot the situation by issuing the kubectl describe command for the relevant VirtualServer and VirtualServerRoute resources.

This sample command shows that the configuration for the cafe VirtualServer was applied successfully – in the Events section, Type is Normal and Reason is AddedOrUpdated.

```
$ kubectl describe vs cafe
. . .
Events:
  Type    Reason          Age   From                      Message
  ----    ------          ----  ----                      -------
  Normal  AddedOrUpdated  16s   nginx-ingress-controller  Configuration for default/cafe was added or updated
```

This sample shows that the configuration for the cafe VirtualServer was rejected as invalid – in the Events section, Type is Warning and Reason is Rejected. The reason is that there were two upstreams both named tea.

```
$ kubectl describe vs cafe
. . .
Events:
  Type     Reason    Age   From                      Message
  ----     ------    ----  ----                      -------
  Warning  Rejected  12s   nginx-ingress-controller  VirtualServer default/cafe is invalid and was rejected: spec.upstreams[1].name: Duplicate value: "tea"
```

In the Status section of the output, the Message and Reason fields report the same information as in the Events section, along with the IP address and ports for each external endpoint.

```
$ kubectl describe vs cafe
. . .
Status:
  External Endpoints:
    Ip:     12.13.23.123
    Ports:  [80,443]
  Message:  VirtualServer default/cafe is invalid and was rejected: spec.upstreams[1].name: Duplicate value: "tea"
  Reason:   Rejected
```

Updates to the NGINX Ingress Operator for Red Hat OpenShift

- Policies – End users can now deploy policies with the NGINX Ingress Operator.

- App Protect scaling – You can use the Operator to manage scaling of the NGINX Ingress Controller with App Protect such that all instances within the same deployment can inspect traffic.

Resources

For the complete changelog for release 1.8.0, see the Release Notes.

To try out the NGINX Ingress Controller for Kubernetes with NGINX Plus, start your free 30-day trial today or contact us to discuss your use cases.

To try the NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code or download a prebuilt container image from Docker Hub.